
In general, most people assume that their bandwidth is their internet speed, but that is not quite true. Bandwidth is the maximum amount of data a connection can carry per second. For example, if your internet provider offers you 100 Mbps, it does not mean that every download will run at 100 megabits per second; it means your connection can carry at most 100 megabits every second. Your actual internet speed comes from a combination of bandwidth and latency. If you want to know more about latency, read this guide on "what is latency and how to reduce it."

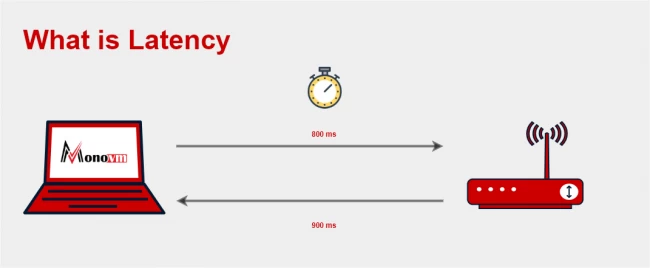

What is Latency

Latency is the amount of time it takes to send information from one machine to another. It can also be described as the delay between when the server sends information and when the client receives it. Latency is most commonly measured in milliseconds (ms), and speed tests usually report it as "ping", which is the round-trip time: how long a small message takes to reach the server and for the reply to come back.
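You can get a rough latency reading yourself by timing a TCP connection. The sketch below assumes that the time a TCP handshake takes is a fair stand-in for one round trip; the function name `tcp_connect_latency_ms` is our own, and unless you pass an IP address, each sample may also include a DNS lookup.

```python
import socket
import time


def tcp_connect_latency_ms(host: str, port: int, attempts: int = 5, timeout: float = 2.0) -> list[float]:
    """Time how long a TCP handshake to (host, port) takes, in milliseconds.

    connect() returns once the SYN / SYN-ACK exchange completes, so each
    sample is roughly one round trip to the server (plus any name lookup).
    """
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # handshake done; the connection is closed immediately
        samples.append((time.perf_counter() - start) * 1000)
    return samples
```

For example, `tcp_connect_latency_ms("example.com", 443)` returns five samples. The smallest one is usually the best estimate of the underlying round-trip time, since larger values include transient delays.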

Bandwidth Vs Latency: Difference

The best way to explain both these concepts is to visualize data flow through cables as water flowing through pipes.

- Latency has to do with how long it takes the water to travel through the pipe. In other words, latency determines how long it takes data to travel from the client to a specific server and back.

- Bandwidth has to do with the pipe's width, determining the amount of water that can move through the pipe at a particular time.

From this example, we can conclude that latency measures delay while bandwidth measures capacity. They are different things, but there is an essential correlation between them.
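A simple way to see how bandwidth and latency combine is a back-of-the-envelope model. It is an assumption for illustration, not an exact description of real transfers: it treats fetching a resource as one round trip of latency plus the time needed to push its bits through the link at full bandwidth, and it ignores TCP handshakes, slow start, and protocol overhead. The function name is hypothetical.

```python
def transfer_time_ms(size_megabits: float, bandwidth_mbps: float, latency_ms: float) -> float:
    """Rough time to fetch a resource: one round trip plus serialization time."""
    serialization_ms = size_megabits / bandwidth_mbps * 1000  # time to push the bits through the link
    return latency_ms + serialization_ms


# A 1-megabit image over 100 Mbps with 50 ms latency: 50 + 10 = 60 ms, latency dominates.
print(transfer_time_ms(1, 100, 50))
# An 800-megabit (100 MB) file over the same link: 50 + 8000 = 8050 ms, bandwidth dominates.
print(transfer_time_ms(800, 100, 50))
```

For small resources the latency term dominates, so extra bandwidth barely helps; for large files the bandwidth term dominates.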

Bandwidth Vs Latency: Relationship

There is a cause-and-effect relationship between latency and bandwidth: one affects how the other behaves, and both affect your internet speed. More precisely, the amount of bandwidth you have can significantly affect your latency, because low bandwidth causes congestion (more data is being sent than the bandwidth allows, so packets wait in a queue), which in turn increases latency. Think of a one-lane road compared with a highway: if 20 cars set off on a 5-lane highway, they will all reach the destination sooner than if the same 20 cars had to drive single file down a 1-lane road.
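The road analogy can be made concrete with a toy queueing model, assuming all 20 packets (the "cars") arrive at the same moment and the link sends them one after another at its full rate. The function name and the 1,500-byte packet size are illustrative assumptions.

```python
def burst_finish_times_ms(num_packets: int, packet_bytes: int, bandwidth_mbps: float) -> list[float]:
    """Time at which each packet of a simultaneous burst finishes sending.

    Packets leave one at a time, so packet k also waits for every packet
    ahead of it: queueing delay grows with the size of the burst.
    """
    per_packet_ms = packet_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return [(k + 1) * per_packet_ms for k in range(num_packets)]


# 20 full-size packets, like the 20 cars in the example.
one_lane = burst_finish_times_ms(20, 1500, 1)  # 1 Mbps link
highway = burst_finish_times_ms(20, 1500, 5)   # 5 Mbps link: five times the capacity
print(f"last packet on 1 Mbps: {one_lane[-1]:.0f} ms")
print(f"last packet on 5 Mbps: {highway[-1]:.0f} ms")
```

Each packet takes 12 ms to send at 1 Mbps, so the last one waits 240 ms; at 5 Mbps the same burst clears in 48 ms.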

What is an Acceptable Latency

The perfect latency figure is 0 ms, but that is physically impossible: no signal can travel faster than light, and in fiber optic cable, which we currently use to transfer data over long distances, light travels at only about two-thirds of its speed in a vacuum. The typical latency of fiber optic cable is about 4.9 microseconds per kilometer (0.0049 ms/km), and cable imperfections can push it higher. What counts as acceptable latency depends on what you use the connection for:

- If you are streaming video, latency of up to around 4 to 5 seconds (4,000 to 5,000 ms) is acceptable.

- If using VoIP (voice over IP), the latency should be much lower, at about 150-200ms.

- If playing games online, the desired latency figure is even lower, at 30-50ms.
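Using the 4.9 µs/km figure, you can estimate the best-case round trip for a given distance and compare it with the targets above. This sketch treats those targets as round-trip budgets (an assumption, since ping reports round-trip time) and uses the upper end of each range; the 6,000 km distance is hypothetical. Real paths add routing, queuing, and processing delays, so the result is only a lower bound.

```python
FIBER_MS_PER_KM = 0.0049  # about 4.9 microseconds per km, one way

# Upper end of each range from the list above, treated as round-trip budgets (assumption).
BUDGET_MS = {"video streaming": 5000, "VoIP": 200, "online gaming": 50}


def fiber_round_trip_ms(distance_km: float) -> float:
    """Best-case round trip over fiber: there and back, with no queuing or processing."""
    return 2 * distance_km * FIBER_MS_PER_KM


def fits_budget(use_case: str, distance_km: float) -> bool:
    """True if propagation delay alone stays within the use case's budget."""
    return fiber_round_trip_ms(distance_km) <= BUDGET_MS[use_case]


distance_km = 6000  # hypothetical distance between client and server
print(f"minimum round trip: {fiber_round_trip_ms(distance_km):.1f} ms")
for use_case in BUDGET_MS:
    print(f"{use_case}: {'OK' if fits_budget(use_case, distance_km) else 'too far'}")
```

At 6,000 km the fiber alone needs about 58.8 ms for a round trip, already above the 50 ms gaming target, which is why choosing a nearby server matters.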

What factors affect latency

Besides bandwidth, which we discussed previously, two main factors affect latency:

- The main factor is the distance between the client and the server. Since data takes time to traverse distances, the farther you are from the data source, the longer it takes for the data to reach your machine.

- The second factor is congestion, which goes hand in hand with bandwidth. The lower the bandwidth of your connection, the higher the chance of experiencing congestion.

Wi-Fi Latency

Wi-Fi offers good internet, but wireless signals are more susceptible to noise and interference. When interference causes packet loss, the lost packets have to be re-sent, which adds delay. Wi-Fi also adds extra steps, such as its own protocol overhead and encryption, for data traveling to and from a computer. And over distance, wireless signals fade or drop the connection much sooner than an Ethernet connection does.
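To see why packet loss on Wi-Fi raises latency, here is a simple probability model. It assumes each transmission attempt is lost independently with the same probability and that every retry costs a fixed penalty; the function name and the numbers in the example are hypothetical.

```python
def expected_delay_ms(base_ms: float, loss_rate: float, retransmit_penalty_ms: float) -> float:
    """Average delivery delay when lost packets must be re-sent.

    With independent losses, the number of failed attempts before a
    success is geometric, with mean loss_rate / (1 - loss_rate).
    """
    if not 0 <= loss_rate < 1:
        raise ValueError("loss_rate must be in [0, 1)")
    expected_retries = loss_rate / (1 - loss_rate)
    return base_ms + expected_retries * retransmit_penalty_ms


# Hypothetical numbers: 5 ms base delay, 20 ms penalty per retry.
for loss in (0.0, 0.01, 0.1):
    print(f"{loss:.0%} loss -> {expected_delay_ms(5, loss, 20):.2f} ms")
```

The average rises only modestly at low loss rates, but any individual packet that is lost pays the full penalty, which shows up as sudden lag spikes.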

Router

Finally, an older router can cause several problems with your internet connection. Older routers often don't support the latest Wi-Fi standards, and they can struggle when many devices are connected at once, both of which can increase latency.

Wi-Fi vs. Ethernet cable

A wired Ethernet connection is less affected by interference than Wi-Fi, so switching to Ethernet can lower latency. The cable itself also matters: a damaged or worn-out Ethernet cable can increase latency, so try replacing it.

Website Related Issues

Some websites take a long time to load because they are full of HD images, HD graphics, or GIFs. In that case, the delay comes from the website itself rather than your connection, so check whether the problem affects only that site before troubleshooting your own setup.

How to Reduce Latency

If you are an end user, there is little you can do to reduce latency other than using the servers geographically nearest to you and using your internet connection solely for the task at hand, which reduces congestion from other processes sharing the connection. For example, if you want to download a large file as fast as possible, avoid watching YouTube on the same connection, since it takes bandwidth away from the download. As a server admin, you could use the following techniques:

- HTTP/2

- Fewer External HTTP requests

- Using a CDN

- Using Prefetching Methods

- Browser Caching
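As one example of browser caching, a server can send a `Cache-Control` header so browsers reuse static files instead of requesting them again, which saves a round trip per file on repeat visits. This is a minimal sketch using Python's built-in `http.server`, suitable only for local experiments; the list of static file types and the one-day `max-age` are assumptions you would tune for your own site, and `CachingHandler` and `serve` are hypothetical names.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit

# File types treated as long-lived static assets (an illustrative assumption).
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".gif", ".woff2")


class CachingHandler(SimpleHTTPRequestHandler):
    """Serves files from a directory and adds a Cache-Control header to each response."""

    def end_headers(self):
        path = urlsplit(self.path).path  # ignore any ?query string
        if path.endswith(STATIC_SUFFIXES):
            # Browsers may reuse static assets for one day without re-requesting them.
            self.send_header("Cache-Control", "public, max-age=86400")
        else:
            # HTML and other files must be revalidated on each visit so updates show up.
            self.send_header("Cache-Control", "no-cache")
        super().end_headers()


def serve(port: int = 8000, directory: str = ".") -> None:
    """Serve `directory` on localhost until interrupted."""
    handler = functools.partial(CachingHandler, directory=directory)
    ThreadingHTTPServer(("127.0.0.1", port), handler).serve_forever()
```

Calling `serve(8000, "public")` would serve a hypothetical `public` directory on port 8000. Production web servers and CDNs set the same header through their own configuration.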

Apart from the techniques above, here are some quick ways to reduce latency:

Check the internet connection speed

If you face latency issues, start by checking your internet connection speed, since a slow connection is a major cause of latency problems. For online gaming, a connection of around 15 to 20 Mbps is generally enough to play without latency issues.

Router's Position

If your system is far from the router, try moving it closer. As we mentioned earlier, distance affects latency, so reducing the distance between the router and your system helps eliminate latency-related issues.

Close Background Programs and Websites

Bandwidth-heavy programs and websites can easily increase the latency of your system, so it is a good idea to close them. If you are using YouTube or Netflix and face latency issues, close them.

Restart or Replace Router

As a quick fix, restart your router. Heavy, prolonged use can leave a router misbehaving, and restarting it refreshes its connections, which can improve latency.

If your router has recurring issues and it is an older model, replace it to improve the reliability and speed of your connection. Many routers also offer a feature called Quality of Service (QoS), which lets you prioritize certain traffic, for example traffic to and from your game console, to improve its latency.
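QoS implementations vary between router vendors. The sketch below shows one common idea, strict priority queuing, in which latency-sensitive packets always jump ahead of bulk traffic. The traffic classes, their priorities, and the `StrictPriorityQueue` name are hypothetical.

```python
import heapq
import itertools

# Lower number = higher priority. These traffic classes are hypothetical examples.
PRIORITY = {"game": 0, "voip": 0, "web": 1, "download": 2}


class StrictPriorityQueue:
    """Toy QoS scheduler: always sends the highest-priority packet waiting.

    Packets within the same priority class keep their arrival order.
    """

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker that preserves FIFO order

    def enqueue(self, traffic_class: str, packet: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._arrival), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = StrictPriorityQueue()
for i in range(3):
    q.enqueue("download", f"download-{i}")
q.enqueue("game", "game-0")  # arrives last but is sent first
print([q.dequeue() for _ in range(len(q))])
```

The game packet arrives after three download packets but leaves first. A drawback of strict priority is that heavy high-priority traffic can starve everything else, which is why many routers use weighted schemes instead.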

Conclusion

So this is how you can reduce latency easily. If you are facing latency issues but don't know the reason, this article should help you identify it. As we mentioned earlier, latency plays a major role in how quickly information travels from one system to another, so low latency is essential for transferring information in real time.